If you ask users what makes a modern data warehouse based on SAP HANA so special, they usually point to its speed. However, the implications of the in-memory approach go much deeper than they first appear: they affect both the fundamental design and the successful operation of the data warehouse. This article highlights two aspects. The first is the impact of redundancy-free data management on the concept of data delivery. The second is the possibility of using the conceptual data model as the basis for implementation.

Building a data warehouse with SAP HANA

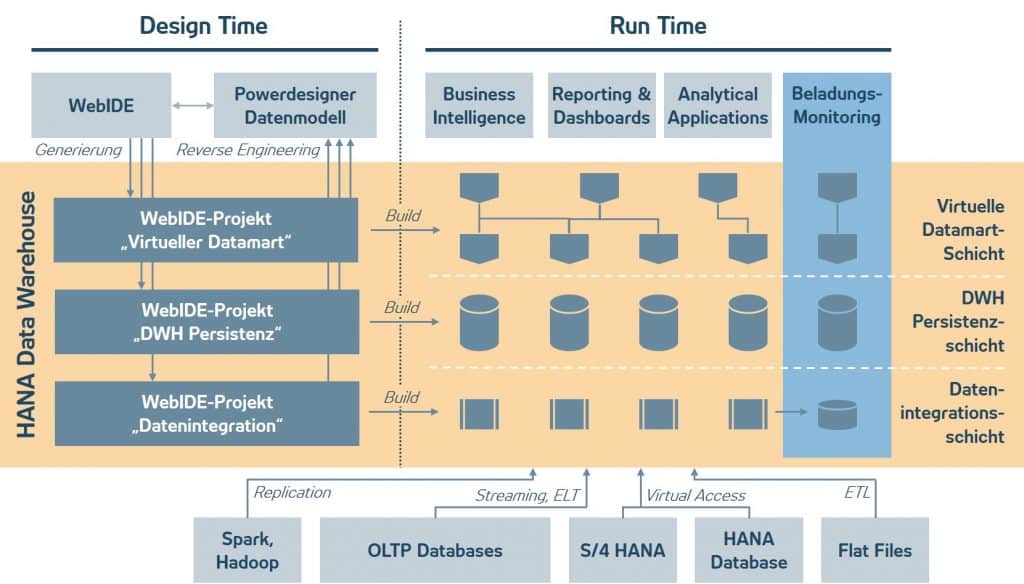

To illustrate the fundamental difference from the classic model, we first discuss the structure of the modern data warehouse approach with SAP HANA.

Fig.: Structure of a HANA data warehouse

- Persistence layer: There is only one persistent data storage layer. The data model of this layer is relational and oriented towards analytical evaluation requirements.

- Virtual datamart layer: All consumers of the HANA data warehouse are supplied from this layer, which accesses the persistence layer via views.

- Data integration layer: This layer supplies data to the HANA data warehouse. This is where consistency checks, loading and versioning of the data take place.

Effects of redundancy-free data storage

Once data has been loaded into the persistence layer, it is immediately available to all consumers through the virtual datamart layer. The new architecture thus gives the business departments access to the data as quickly as possible. From their perspective, the decisive question then becomes: Is the data complete, and does it meet my data quality specifications? In the classic data warehouse, the data marts were loaded in a separate step, which provided a natural point at which to determine when the data was complete for a particular consumer and whether it met all data quality requirements. In the HANA data warehouse, these internal processing steps no longer exist.
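The effect can be sketched in a few lines of Python, with the standard-library `sqlite3` module standing in for SAP HANA. This is an assumption made only to keep the example runnable, and the table `PL_ACCOUNT` and view `DM_BALANCE_TOTAL` are hypothetical. The consumer-facing object is a view over the single persisted table, so there is no separate datamart load and therefore no built-in step at which completeness could be checked per consumer.

```python
import sqlite3

# SQLite is only a stand-in for SAP HANA here; the pattern is what matters:
# one persisted table, and consumers read exclusively through views.
con = sqlite3.connect(":memory:")
con.execute("CREATE TABLE PL_ACCOUNT (ACCOUNT_ID TEXT PRIMARY KEY, BALANCE REAL)")

# A "virtual" datamart object: no copy of the data, just a query over the persistence layer.
con.execute(
    "CREATE VIEW DM_BALANCE_TOTAL AS "
    "SELECT COUNT(*) AS N, SUM(BALANCE) AS TOTAL FROM PL_ACCOUNT"
)

# Load the persistence layer.
con.execute("INSERT INTO PL_ACCOUNT VALUES ('A1', 100.0), ('A2', 250.0)")
con.commit()

# No datamart reload: the view reflects the new rows as soon as they are committed.
print(con.execute("SELECT N, TOTAL FROM DM_BALANCE_TOTAL").fetchone())  # (2, 350.0)
```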

In the HANA data warehouse, completeness and correctness must therefore be determined by other methods and at a different point. Looking at the three layers, it becomes clear that information on data availability must be captured while the persistence layer is being loaded, so that it can then be made available to all consumers as part of load monitoring.

There are two fundamentally different approaches to supplying load monitoring with data. One option is to build mechanisms into each ETL process that pass their log information to the HANA data warehouse via suitable interfaces. With this variant, implementation costs rise significantly when different ETL scenarios, such as batch deliveries and streaming, are used in parallel. The other option is to have the data integration layer handle load logging centrally: methods upstream of the actual loading procedures receive the data and log the loading status. This centralized approach has significant advantages in terms of maintainability and extensibility.
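The centralized variant can be sketched roughly as follows. This is a minimal Python sketch, not SAP's implementation. The class `LoadMonitor`, its `load` and `is_complete_for` methods, and the table names are hypothetical, and an in-memory dict stands in for the persistence layer. Every ETL path hands its delivery to the same wrapper, which checks it, writes it and records the outcome, so completeness for a given consumer can be derived from the load log alone.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Iterable

# Hypothetical sketch of a central load-logging wrapper in the data integration layer.
# Batch and streaming paths would both call LoadMonitor.load instead of writing directly.


@dataclass
class LoadLogEntry:
    table: str
    rows: int
    status: str  # "OK" or "REJECTED"
    loaded_at: datetime


@dataclass
class LoadMonitor:
    entries: list[LoadLogEntry] = field(default_factory=list)

    def load(
        self,
        table: str,
        rows: list[dict],
        check: Callable[[dict], bool],
        write: Callable[[str, list[dict]], None],
    ) -> LoadLogEntry:
        """Check a delivery, write it only if every row passes, and log the outcome."""
        ok = all(check(row) for row in rows)
        if ok:
            write(table, rows)
        entry = LoadLogEntry(table, len(rows), "OK" if ok else "REJECTED",
                             datetime.now(timezone.utc))
        self.entries.append(entry)
        return entry

    def is_complete_for(self, required_tables: Iterable[str]) -> bool:
        """Data is complete for a consumer once every table it needs has a successful load."""
        loaded = {e.table for e in self.entries if e.status == "OK"}
        return set(required_tables) <= loaded


# Usage: a dict stands in for the persistence layer.
persistence: dict[str, list[dict]] = {}


def write(table: str, rows: list[dict]) -> None:
    persistence.setdefault(table, []).extend(rows)


monitor = LoadMonitor()
monitor.load("ACCOUNT", [{"id": 1}], check=lambda r: "id" in r, write=write)
monitor.load("TRANSACTION", [{"amount": 5}], check=lambda r: "id" in r, write=write)
print(monitor.is_complete_for(["ACCOUNT"]))                 # True
print(monitor.is_complete_for(["ACCOUNT", "TRANSACTION"]))  # False
```

Because logging happens in one place, a new delivery channel only has to call the same wrapper. The per-ETL-path alternative would need its own logging interface for each channel.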

For the centralized approach, the question arises of how to keep the implementation and operating costs of the upstream data integration layer as low as possible. After all, upstream methods have to be created for dozens of tables in the HANA data warehouse. Designing and implementing them by hand means a considerable amount of work, especially when the data warehouse is extended later.

Linking the conceptual and physical data models

Strictly speaking, all the information required for load control is already contained in the data model of the data warehouse. This makes it possible to automate development as early as the implementation phase of the HANA data warehouse (i.e., at design time), for example by generating the data integration layer.
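As an illustration of design-time generation, the following minimal Python sketch derives staging-table DDL from model metadata. It is only a stand-in for the generation capabilities of a modeling tool. The `Entity` and `Attribute` classes, the `STG_` prefix and the `LOAD_ID` column are hypothetical conventions chosen for this example. The `LOAD_ID` column links each staged row to a load-log entry. The output is SAP HANA-style SQL text; the sketch only produces it and does not execute it.

```python
from dataclasses import dataclass

# Hypothetical, simplified stand-in for the central data model. In practice this
# metadata would come from the modeling tool rather than from Python literals.


@dataclass(frozen=True)
class Attribute:
    name: str
    sql_type: str
    is_key: bool = False


@dataclass(frozen=True)
class Entity:
    name: str
    attributes: tuple[Attribute, ...]


def staging_ddl(entity: Entity) -> str:
    """Generate a staging table for one entity.

    The extra LOAD_ID column (a hypothetical convention) ties every staged row
    to an entry in the load log.
    """
    cols = [
        f'  "{a.name}" {a.sql_type}' + (" NOT NULL" if a.is_key else "")
        for a in entity.attributes
    ]
    cols.append('  "LOAD_ID" BIGINT NOT NULL')
    key_cols = ['"LOAD_ID"'] + [f'"{a.name}"' for a in entity.attributes if a.is_key]
    cols.append(f"  PRIMARY KEY ({', '.join(key_cols)})")
    return f'CREATE COLUMN TABLE "STG_{entity.name}" (\n' + ",\n".join(cols) + "\n);"


model = [
    Entity("ACCOUNT", (
        Attribute("ACCOUNT_ID", "NVARCHAR(20)", is_key=True),
        Attribute("CURRENCY", "NVARCHAR(3)"),
    )),
]

for entity in model:
    print(staging_ddl(entity))
```

Adding an entity to the model and rerunning the generator produces the matching structure. This is what keeps later extensions of the warehouse inexpensive.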

SAP HANA 2.0 includes SAP Enterprise Architecture Designer, an integration of SAP PowerDesigner into the HANA development environment. This makes it possible to model the conceptual properties of the HANA data warehouse in a central data model and to use the generation capabilities of SAP PowerDesigner to automate the implementation of the HANA artifacts. This approach avoids effort and redundancy both in implementation and, especially, in documentation.

SAP uses this approach to create the persistence layer of the SAP HANA Financial Services Data Platform (FSDP). Customers can extend the data model (also in PowerDesigner), and the same generation methods are used to implement these extensions in the persistence layer. The principle can also be applied to data models outside financial services, both to industry-specific data models and to customer-specific ones.

The previous sections showed how this approach can be applied to generating structures in the data integration layer. The question is to what extent the datamart layer can be linked to the data model as well.

The task of the datamart layer is to transform the data model of the data warehouse for reporting and to enrich it with derived information, such as key figures. This is where the generation principle reaches its limits. Conversely, however, the implementation of the datamart layer can be read out and stored in the conceptual data model at a suitable level of abstraction. In other words, this is reverse engineering. The goal is to use the data model as the central reference for data lineage in reporting. Note that reverse engineering can only work comprehensively if the relevant information is already available in structured form in the implemented objects. Precise developer guidelines and naming conventions are therefore a prerequisite for transferring all of this information into the central data model.
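A minimal Python sketch of this reverse-engineering step assumes a hypothetical naming convention, a `DM_` prefix for datamart views and `PL_` for persistence tables. It also assumes a dictionary of direct view dependencies that, in a real system, would be read from the database catalog. The sketch shows why the conventions matter. Objects are classified purely by name, and an object that breaks the convention cannot be placed in the lineage at all.

```python
import re

# Hypothetical naming convention assumed for this sketch:
#   DM_<NAME>  object in the datamart layer (view)
#   PL_<NAME>  table in the persistence layer
NAME_PATTERN = re.compile(r"^(DM|PL)_[A-Z0-9_]+$")


def layer_of(obj: str) -> str:
    """Classify an object by its name; reject names that break the convention."""
    match = NAME_PATTERN.match(obj)
    if match is None:
        raise ValueError(f"{obj!r} violates the naming convention")
    return "datamart" if match.group(1) == "DM" else "persistence"


def lineage(view: str, sources: dict[str, list[str]]) -> set[str]:
    """Return every persistence-layer table a datamart view ultimately reads from."""
    tables: set[str] = set()
    seen: set[str] = set()
    stack = [view]
    while stack:
        obj = stack.pop()
        if obj in seen:
            continue
        seen.add(obj)
        if layer_of(obj) == "persistence":
            tables.add(obj)
        else:
            # Follow the view's direct sources (other views or tables).
            stack.extend(sources.get(obj, []))
    return tables


# Direct dependencies as they might be read from the database catalog (illustrative data).
deps = {
    "DM_RISK_EXPOSURE": ["DM_RISK_BASE", "PL_COLLATERAL"],
    "DM_RISK_BASE": ["PL_ACCOUNT", "PL_TRANSACTION"],
}
print(sorted(lineage("DM_RISK_EXPOSURE", deps)))
# ['PL_ACCOUNT', 'PL_COLLATERAL', 'PL_TRANSACTION']
```

The resulting table-level lineage is the kind of information that could be recorded in the central data model. Column-level lineage and derived key figures would need richer structured metadata than object names alone.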

Summary

In summary, even the preliminary considerations for a modern data warehouse with SAP HANA should address which approach is best suited to in-memory data management. With the right methods and tools, implementation effort can be reduced significantly, both during introduction and for later enhancements, while the documentation in the central data model stays up to date. This is the basis for successful long-term data governance.

Jan Kristof has more than 15 years of experience in SAP Bank Analyzer and SAP BW with a focus on architecture consulting. Currently, his focus is on data modeling and data processing with SAP Bank Analyzer and SAP HANA. Most recently, Jan Kristof took a central role in the development of the business content for SAP Bank Analyzer. As a developer, he is an expert in SAP NetWeaver, ABAP, ABAP Objects and SAP HANA.
