SAP’s new central data system for the banking and insurance sector is FSDP, the Financial Services Data Platform. It is meant to serve as the central analytical platform in a financial services IT architecture. For this purpose, it builds on SAP HANA, SAP’s state-of-the-art in-memory database, which was designed with analytical workloads in mind.

Prior to SAP FSDP, SAP Bank Analyzer served as the central analytical platform. It is approaching its end of life, however, with support ending in 2025.

When establishing FSDP as a future-proof solution, whether as a new customer or when migrating from Bank Analyzer, financial services companies face the challenge of integrating the new platform into their overall architecture. One of the first and most important challenges is loading data from the source systems into SAP FSDP.

This article introduces an approach to this ETL problem: event streaming with Apache Kafka as the basis for reliable and scalable real-time data integration. It first provides background on Apache Kafka, then discusses its use for streaming ETL and the implementation of ADWEKO CSF (Configurable Streaming ETL Framework for FSDP), the framework ADWEKO built for this purpose. The next article in this two-part series will discuss specific challenges that can occur when combining Kafka and FSDP and how ADWEKO CSF solves them. It will also establish best practices for testing such a streaming ETL system.

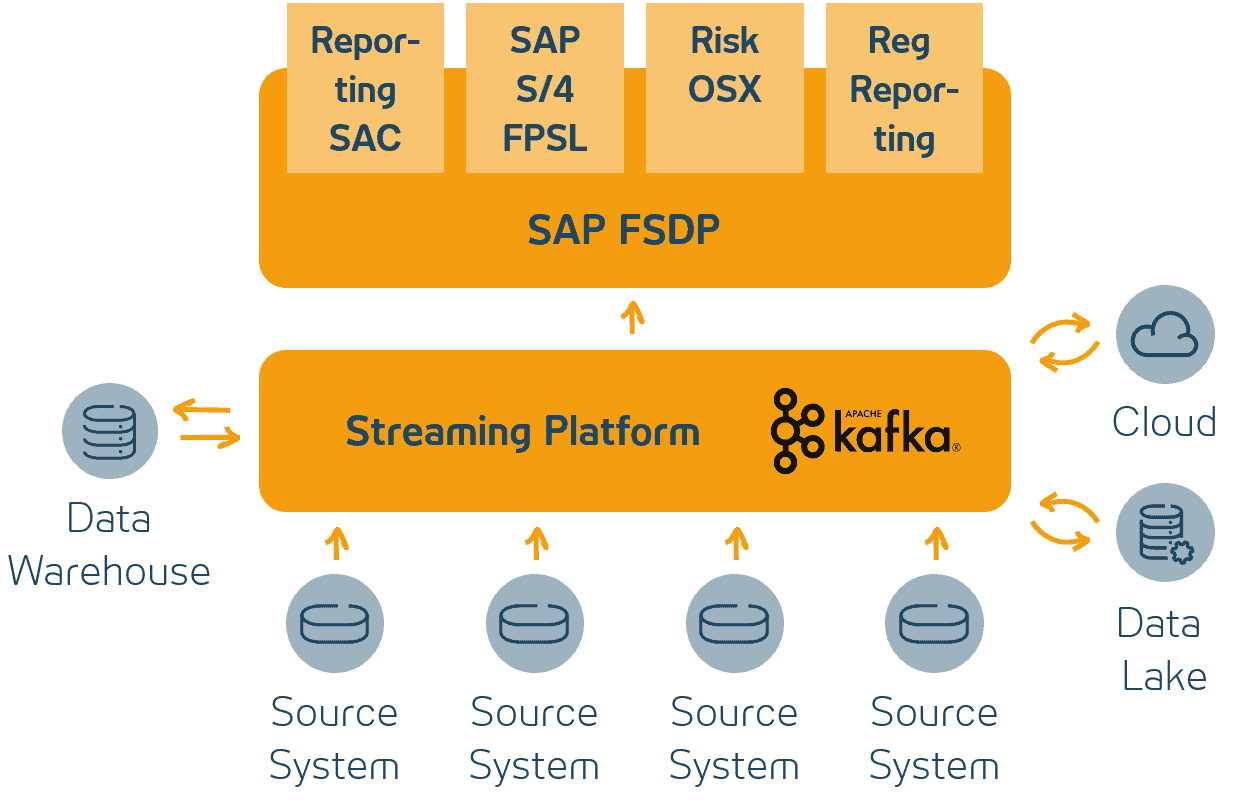

Figure 1: Overview of the target architecture

Why Streaming ETL with Kafka?

Apache Kafka is a state-of-the-art integration platform that can act as the central nervous system for a company’s data. More than 80% of the Fortune 100 companies already use it, including 7 of the 10 largest banks and all 10 of the largest insurance companies. It is a scalable, reliable, and highly available platform that can achieve high throughput.

ETL based on daily batch processes only shows the organization’s status as of yesterday. Streaming ETL, by contrast, can load data into the target systems in real time, so companies gain insight into the current status of the organization in their analytical systems.

The open-source streaming platform Apache Kafka is a good fit for real-time ETL processes. At its core, you can think of Kafka as a partitioned commit log distributed across multiple Kafka brokers. Incoming messages are stored in so-called topics, in the order in which they arrive within each partition. Other applications can read data from these topics sequentially. Applications using Kafka are therefore decoupled from each other and communicate only through Kafka topics. Because messages are persisted in Kafka, messages that are written faster than they are read can be retrieved and processed later.

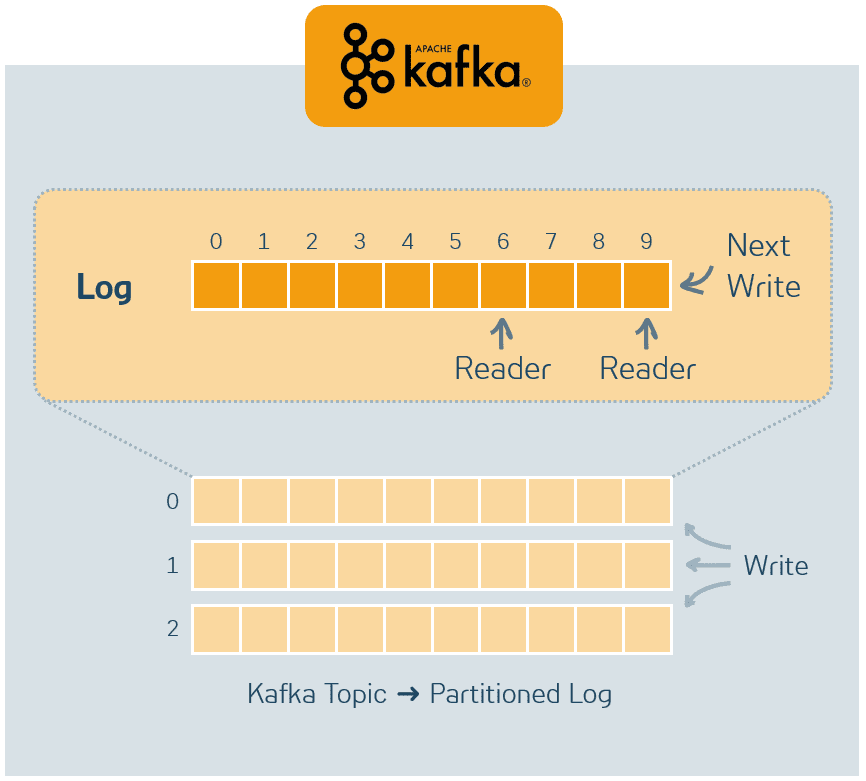

Figure 2: Kafka topic and partitioning

Figure 2 visualizes this concept. Every square represents a message. The writing application, called a “producer” in Kafka terminology, always appends messages to the end of a topic’s partition. The top of the figure shows a partition with two reading applications, called Kafka “consumers”. One consumer has reached message number six. The other has already read all messages of the partition and is therefore at message nine. Each message is uniquely identified by the combination of its topic, its partition, and its offset within that partition. Consumers manage their state independently of each other via their Kafka consumer offset. This state is persisted in a dedicated Kafka topic, which enables consumers to continue where they left off after restarts or system failures.
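
The append-only log and the independent consumer offsets can be illustrated with a small in-memory model. This is only a sketch for intuition, not Kafka code: the class `PartitionLog` and its methods are hypothetical names, and a real cluster stores committed offsets in a dedicated topic rather than in a map next to the data.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** In-memory stand-in for one topic partition: an append-only list of messages. */
final class PartitionLog {
    private final List<String> messages = new ArrayList<>();
    // Committed position per consumer: the offset of the next message to read.
    private final Map<String, Long> committedOffsets = new HashMap<>();

    /** Producer side: append at the end and return the offset assigned to the message. */
    long append(String message) {
        messages.add(message);
        return messages.size() - 1L;
    }

    /** Consumer side: read up to maxMessages, starting at the consumer's committed offset. */
    List<String> poll(String consumer, int maxMessages) {
        int from = (int) Math.min(committedOffsets.getOrDefault(consumer, 0L), messages.size());
        int to = Math.min(from + maxMessages, messages.size());
        return new ArrayList<>(messages.subList(from, to));
    }

    /** Persist the consumer's progress so that it can resume here after a restart. */
    void commit(String consumer, long nextOffset) {
        committedOffsets.put(consumer, nextOffset);
    }

    /** Returns the offset at which the consumer would resume reading. */
    long committed(String consumer) {
        return committedOffsets.getOrDefault(consumer, 0L);
    }
}
```

In this model, two consumers reading the same partition never affect each other: each one only moves its own committed offset, which mirrors the situation shown in figure 2.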

Kafka’s partitioning feature can be used to increase message throughput, because multiple application instances can process the partitions of a topic in parallel. The retention period of messages in Kafka is configurable. Messages can therefore be kept in Kafka for an arbitrarily long time, and Kafka can be used as a long-term data store.
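
How messages end up on partitions can be sketched as follows. The idea is that all messages with the same key land on the same partition, so one application instance sees every update for a given key in order. The class `KeyPartitioner` is a hypothetical name, and `String.hashCode()` is a stand-in chosen for brevity; Kafka’s default partitioner hashes the serialized key with a different hash function.

```java
/** Illustrative key-to-partition mapping; not Kafka's actual partitioner. */
final class KeyPartitioner {
    private KeyPartitioner() {}

    /** Maps a message key to a partition index in the range [0, numPartitions). */
    static int partitionFor(String key, int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        // floorMod keeps the result non-negative even for negative hash codes.
        return Math.floorMod(key.hashCode(), numPartitions);
    }
}
```

With six partitions, for example, up to six instances of a consuming application can each work on their own share of the keys in parallel.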

Kafka’s advantages are high scalability and high message throughput at low latency. These are possible because a cluster can be grown to the required size by adding Kafka brokers. Kafka also provides high availability, since topics can be replicated across multiple Kafka brokers.

Kafka Streams

Kafka Streams is the stream processing framework included in Kafka. It interprets messages in a topic as a stream of data that is processed continuously. Using Kafka Streams, transformations of these messages can be defined and transformed messages can again be written to Kafka topics.

Especially in the context of financial services with high-value data, message processing guarantees are one of the most important features an ETL framework needs to offer.

With Kafka Streams, “exactly-once” message processing guarantees can be enabled via a single configuration option, which ensures that each message is processed exactly once with regard to Kafka. Under the hood, Kafka Streams leverages Kafka transactions and the idempotent Kafka producer to implement this functionality.
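
As a rough illustration, the option in question is the Kafka Streams setting `processing.guarantee`. The sketch below only builds the configuration with plain `java.util.Properties`, so it runs without a Kafka dependency. The class name `ExactlyOnceConfig` and the example values are assumptions, and the exact value to use depends on the Kafka version: recent releases use `exactly_once_v2`, older ones `exactly_once`.

```java
import java.util.Properties;

/** Builds a Kafka Streams configuration with exactly-once processing enabled. */
final class ExactlyOnceConfig {
    private ExactlyOnceConfig() {}

    static Properties streamsProperties(String applicationId, String bootstrapServers) {
        Properties props = new Properties();
        props.put("application.id", applicationId);
        props.put("bootstrap.servers", bootstrapServers);
        // The default is "at_least_once". Which exactly-once value is accepted
        // depends on the Kafka version in use (assumption: a recent release).
        props.put("processing.guarantee", "exactly_once_v2");
        return props;
    }
}
```

The resulting `Properties` object would then be passed to the Kafka Streams application at startup. Note that the guarantee covers reading from and writing to Kafka only; writes to external systems need additional measures.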

Streaming ETL to SAP FSDP?

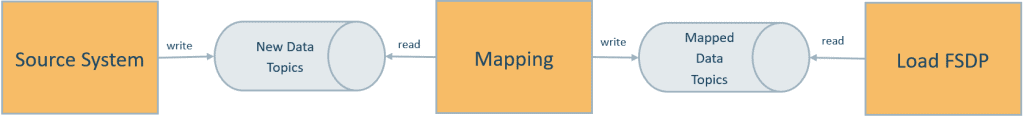

To enable companies to leverage the advantages resulting from the combination of streaming ETL based on Kafka and the mature analytical platform SAP FSDP, ADWEKO has developed CSF as a configurable ETL framework for FSDP based on Kafka and Kafka Streams. The core architecture of CSF is visualized in figure 3. The system is separated into three loosely coupled steps which each have own applications that are connected by two Kafka topics:

- data extraction from the source system

- mapping of the data from source to target format

- load to FSDP

The data format of messages in Kafka is configurable, it is possible to use JSON or Avro.

Figure 3: CSF architecture

Data Extraction Source System

Before data can be processed using Kafka, it first needs to be extracted from the source systems. Kafka provides different options for this purpose. With Kafka Connect, a so-called source connector can extract data from a database and write it to Kafka. Connectors for many commonly used database systems are already available, and new connectors can be written for other databases. A source system can also be connected to Kafka using Kafka Streams or a Kafka producer. In source systems with a Kafka-based microservice architecture, the data is already available in Kafka and can serve directly as the starting point for the ETL process to SAP FSDP.

There are also multiple options for how the data is made available in Kafka. Complete business objects can be extracted directly, for example with a JDBC source connector. Alternatively, change data capture (CDC) events from the source systems can be written to Kafka, where the objects are rebuilt. This can be done, for example, with Oracle GoldenGate or Debezium as a Kafka Connect source. Both approaches take the data from the source systems in a non-invasive way, without any need to change the source system.

Mapping

The mapping component of CSF uses Kafka Streams to process messages arriving in Kafka and writes the result to a separate output topic. The mapping is done with the Java library Jolt, which allows message transformations to be defined in a JSON file. Transformations such as renaming fields, concatenating values, and many more are available out of the box. If more specific transformations are required, user-defined functions can be created, allowing arbitrary message transformations. The mapping component already uses this feature to define a metadata mapping function, which maps the values of a field according to a value mapping specified in a JSON file.
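
To make the three kinds of transformation concrete, the following sketch applies a rename, a concatenation, and a value lookup to a message represented as a plain `Map`. It deliberately does not use Jolt or its JSON specification format, and it is not CSF code: the class `MappingSketch`, all field names, and the currency lookup table are hypothetical and chosen only for illustration.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Plain-Java illustration of rename, concatenate, and metadata (value) mapping. */
final class MappingSketch {
    private MappingSketch() {}

    // Hypothetical value mapping; in the setup described above, such a table
    // would be read from a JSON file rather than hard-coded.
    static final Map<String, String> CURRENCY_CODES = Map.of("EURO", "EUR", "DOLLAR", "USD");

    static Map<String, Object> mapToTarget(Map<String, Object> source) {
        Map<String, Object> target = new LinkedHashMap<>();
        // Rename: the source field "contractNo" becomes "CONTRACT_ID" in the target.
        target.put("CONTRACT_ID", source.get("contractNo"));
        // Concatenate: two source fields are combined into one target field.
        target.put("PARTNER_NAME", source.get("firstName") + " " + source.get("lastName"));
        // Metadata mapping: translate the value via the lookup table;
        // values without an entry are passed through unchanged.
        String currency = String.valueOf(source.get("currency"));
        target.put("CURRENCY", CURRENCY_CODES.getOrDefault(currency, currency));
        return target;
    }
}
```

The appeal of a declarative mapping, as opposed to code like this, is that new source systems can be connected by changing configuration files instead of redeploying the application.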

Load to SAP FSDP

This section gives an overview of how to load data from Kafka into FSDP.

Options for Load

After the data has been transformed into the required format, there are multiple options for inserting it into FSDP: existing Kafka connectors can be used, or a loader can be implemented specifically for FSDP.

Two existing Kafka Connect connectors, the JDBC connector and the SAP HANA connector, can insert data directly using JDBC. These connectors do not support exactly-once loading, so they would have to be modified to ensure that data arrives in the target tables without duplicates or data loss. This can be done by using database transactions and additionally tracking Kafka offsets in the target database, so that the current state can be recovered if the application crashes while executing an insert statement.
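
The offset-tracking idea can be sketched with an in-memory model. The key point is that the row and the offset of the message it came from are stored as one unit, so after a crash the stored offset always matches the stored data. The class `OffsetTrackingSink` is a hypothetical name, the synchronized method merely stands in for a database transaction, and the sketch assumes a single partition.

```java
import java.util.ArrayList;
import java.util.List;

/** In-memory stand-in for a target table plus an offset-tracking table. */
final class OffsetTrackingSink {
    private final List<String> targetRows = new ArrayList<>();
    private long lastStoredOffset = -1L; // offset of the last stored message, -1 if none

    /**
     * Stores one row together with its offset, mimicking a single database transaction.
     * Returns false if the message was already stored and is therefore skipped.
     */
    synchronized boolean write(long offset, String row) {
        if (offset <= lastStoredOffset) {
            return false; // redelivered message, for example after a crash
        }
        targetRows.add(row);
        lastStoredOffset = offset;
        return true;
    }

    /** After a restart, reading resumes from this offset. */
    synchronized long resumeOffset() {
        return lastStoredOffset + 1;
    }

    /** Returns a copy of the rows stored so far. */
    synchronized List<String> rows() {
        return new ArrayList<>(targetRows);
    }
}
```

In a real connector, both writes would be part of the same database transaction, and the consumer would seek to the stored offset on startup instead of relying on the offset committed to Kafka.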

The other way is to implement a Kafka Streams application, a Kafka Connect sink connector, or a Kafka consumer specifically for loading data into SAP FSDP. Several options are available:

- Using load procedures that directly insert single rows into the target tables

- Using load procedures that directly insert multiple rows into the target tables (which can only be done using temporary tables as input, since table type input parameters for procedures are not supported by the HANA JDBC implementation)

- Inserting multiple rows combined in a mini batch directly into the target tables via an insert statement

- Inserting mini batches into staging tables and using load procedures to copy the data into the target tables

For SAP FSDP, the staging area approach has the advantage that a load procedure, which copies data from the staging tables to the target tables, can check the data, historize old data, and remove duplicates. The staging tables and the tables used for load management by the load procedures can also be used for monitoring and for retrieving information on loads into SAP FSDP. It is also possible to use ADWEKO data warehouse manager for SAP HANA as the staging layer, which adds capabilities for data quality management and the definition of data quality checks.

CSF Implementation

CSF extends the exactly-once guarantee beyond Kafka by combining Kafka’s at-least-once processing guarantee with idempotent HANA load procedures that ignore duplicates. High throughput and real-time loading are ensured by dynamically sized mini batches. CSF also inserts data lineage information associated with the Kafka messages and links it to the data in FSDP.

A Kafka consumer inserts data into a staging area using JDBC. To optimize write performance, it inserts dynamically sized mini batches, because single-row inserts into HANA are relatively slow in comparison. The batch size is determined by the number of arriving messages and a configurable maximum value: a batch is inserted into the staging tables as soon as the configured maximum batch size is reached or no more messages are available in Kafka. Thus, when few messages arrive, batches are small and real-time loading is preserved. As the number of arriving messages increases, the batch size grows as well, which provides high throughput.
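
The batching rule described above fits in a few lines. The following is a minimal sketch, not the CSF implementation: the class `MiniBatcher` is a hypothetical name, and a plain `Queue` stands in for the messages currently available from Kafka.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

/** Forms a mini batch from whatever is available, capped at a configurable maximum. */
final class MiniBatcher {
    private MiniBatcher() {}

    /** Takes messages until maxBatchSize is reached or no more messages are available. */
    static <T> List<T> nextBatch(Queue<T> available, int maxBatchSize) {
        if (maxBatchSize <= 0) {
            throw new IllegalArgumentException("maxBatchSize must be positive");
        }
        List<T> batch = new ArrayList<>();
        while (batch.size() < maxBatchSize && !available.isEmpty()) {
            batch.add(available.poll());
        }
        return batch;
    }
}
```

With a maximum of 5, a backlog of 12 messages yields batches of 5, 5, and 2, while a trickle of 3 messages is written immediately as one batch of 3 instead of waiting for the batch to fill up.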

After a mini batch has been loaded into the staging tables, the data is copied into the target tables by load procedures that also exclude duplicates. Only after the load procedures have run successfully are the message offsets committed to Kafka. The combination of duplicate elimination and at-least-once processing in Kafka provides exactly-once delivery to SAP FSDP: every message arrives in SAP FSDP, and none is lost or duplicated in the process. If data cannot be inserted, for example because of a connection problem, the application waits until the connection can be restored, reconnects, and continues where it left off.
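
Why this combination yields exactly-once results can be shown with a small simulation. It is a sketch under simplifying assumptions, not CSF code: the class `IdempotentLoader` is a hypothetical name, a list stands in for the Kafka topic, a map keyed by a business key stands in for the target table, and duplicates are recognized by that key.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Simulates at-least-once delivery combined with an idempotent load step. */
final class IdempotentLoader {
    private final List<Map.Entry<String, String>> topic; // messages as (business key, payload)
    private final Map<String, String> targetTable = new LinkedHashMap<>();
    private int committedOffset = 0; // next offset to read after a restart

    IdempotentLoader(List<Map.Entry<String, String>> topic) {
        this.topic = topic;
    }

    /** Loads one mini batch starting at the committed offset, then commits the new offset. */
    void runOnce(int maxBatchSize, boolean crashBeforeCommit) {
        int end = Math.min(committedOffset + maxBatchSize, topic.size());
        for (Map.Entry<String, String> message : topic.subList(committedOffset, end)) {
            // Idempotent load: a key that is already present is ignored.
            targetTable.putIfAbsent(message.getKey(), message.getValue());
        }
        if (crashBeforeCommit) {
            return; // the offset stays uncommitted, so the batch is delivered again
        }
        committedOffset = end;
    }

    int committedOffset() {
        return committedOffset;
    }

    int loadedRows() {
        return targetTable.size();
    }
}
```

If a run crashes after loading but before committing, the next run receives the same messages again. Because the load step ignores keys it has already seen, the target ends up with each message exactly once, even though Kafka delivered some of them twice.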

Jan Berberich is an experienced Java developer and data engineer. He is an expert in data integration using Apache Kafka, especially for SAP HANA and other SAP applications.
