Introduction

The world’s most valuable resource is no longer oil, but data.

THE ECONOMIST

The title of an article in The Economist of May 2017[1] illustrates the importance of data in today’s world. Originally, this metaphor was used in the context of cartel-like structures among large digital companies, similar to those in the oil industry. In the meantime, however, the paradigm shift regarding the “most valuable resource in the world” has become an integral part of entrepreneurial activity in all industries and sectors of the economy.

Data only attains this high value when it is of sufficient quality. This is where IT-supported data quality management comes in.

This white paper defines key terms and explains why good data quality is necessary, measurable, and achievable. ADWEKO also provides insights into possible fields of action and successful project examples.

[1] https://www.economist.com/leaders/2017/05/06/the-worlds-most-valuable-resource-is-no-longer-oil-but-data

2. Data quality management at banks

2.1 Definitions

Data Quality describes the correctness, relevance, and reliability of data, depending on the purpose the data is intended to serve in a particular context.[1]

Data Quality Management refers to all measures that enable an asset-oriented view, control and quality assurance of data in a company. Data quality management is a sub-discipline of a holistic data governance strategy.[2]

[1] https://www.haufe-akademie.de/blog/themen/controlling/datenqualitaetsmanagement-engl-data-quality-management/

[2] https://www.haufe-akademie.de/blog/themen/controlling/datenqualitaetsmanagement-engl-data-quality-management/

2.2 Value contribution from risk aspects

There are many reasons to ensure good data quality in a company, and not only since the Basel Committee on Banking Supervision firmly anchored the “Principles for effective risk data aggregation and risk reporting” (BCBS 239) in the regulatory framework in 2013.

On the one hand, a historically grown IT infrastructure, a large number of manual interventions, and lengthy processes have a negative impact on data quality in the company. On the other hand, there is potential here to reduce run-the-bank (RTB) and change-the-bank (CTB) costs, to leverage synergies between the various bank management areas, and to increase agility and speed.

Good data quality can also open up new subject areas. These include automation topics such as various forms of digitalization through Robotic Process Automation, a better understanding of customer needs and, last but not least, Artificial Intelligence (AI)[1].

Overall, companies with a high level of data quality are better able to cover both existing and new processes and business areas, and can thus cut costs and generate new revenues. Banks can also better assess their own risks and take countermeasures at an earlier stage. As a consequence, the collapse of an institution and the resulting knock-on effects on the economy and society can be prevented: BCBS 239 for all, so to speak.

[1] Without sufficient data quality, an AI cannot be trained.

2.3.1 Analysis of the status quo

To measure and improve data quality in a meaningful way, an analysis of the status quo is essential. It is advisable to take stock together with a company-wide BI group, data owners/stewards, and system experts, for example in workshops. Evaluations of past DQ problems from incident management tools, such as SAP Solution Manager or ServiceNow, can make a valuable contribution to this analysis. Based on the results, initial starting points can be identified in order to

- improve existing DQ measures and data models, and

- set up new DQ checks.

Once the current status has been analyzed and possible new requirements have been outlined from a functional perspective, the technical implementation can take place.

2.3.2 Measurement

2.3.2.1 Data quality dimensions

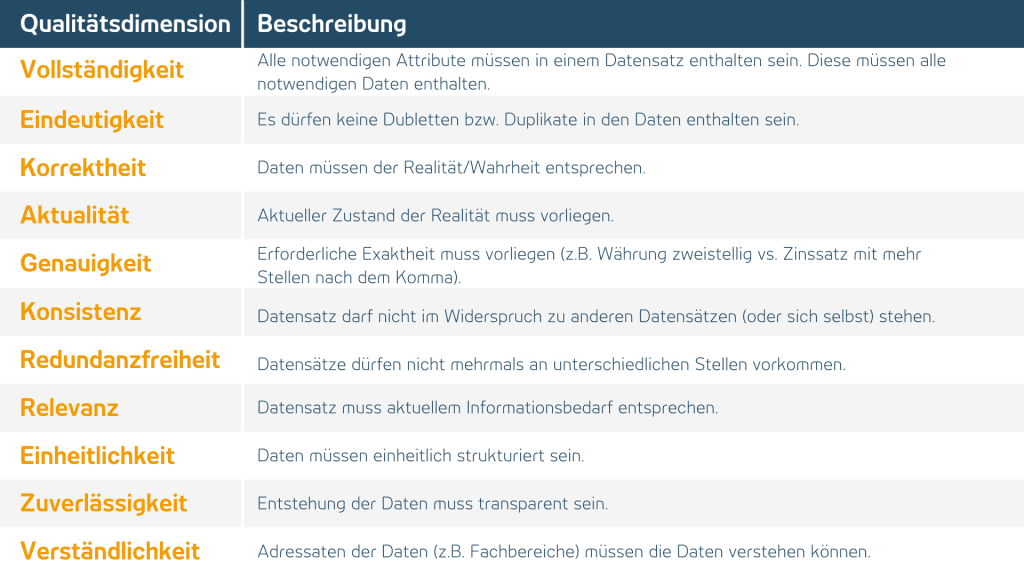

In order to evaluate and control the quality of a product (in this case, data), it must be measurable. A number of quality dimensions have emerged in relation to data quality; they are summarized in the accompanying figure.[1]

[1] The measurement of each dimension is well illustrated in the following link: Measuring Data Quality: Quantifying Data Quality with 11 Criteria (business-information-excellence.de)
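
As a minimal sketch of how such dimensions become measurable, the following Python computes two commonly cited ones, completeness and uniqueness, for a small set of records. The field names and sample loans are hypothetical, and the choice of these two dimensions is an assumption made for illustration; it is not taken from the figure.

```python
from typing import Any

# Hypothetical sample records; field names are assumptions for illustration.
loans = [
    {"loan_id": "L-001", "customer_id": "C-10", "amount": 25000.0},
    {"loan_id": "L-002", "customer_id": None, "amount": 12000.0},
    {"loan_id": "L-002", "customer_id": "C-11", "amount": 12000.0},
    {"loan_id": "L-003", "customer_id": "C-12", "amount": None},
]


def completeness(records: list[dict[str, Any]], field: str) -> float:
    """Share of records in which `field` is populated (not None or empty)."""
    if not records:
        return 1.0
    filled = sum(1 for r in records if r.get(field) not in (None, ""))
    return filled / len(records)


def uniqueness(records: list[dict[str, Any]], key: str) -> float:
    """Share of distinct key values among all records."""
    if not records:
        return 1.0
    return len({r.get(key) for r in records}) / len(records)


print(f"customer_id completeness: {completeness(loans, 'customer_id'):.0%}")
print(f"loan_id uniqueness: {uniqueness(loans, 'loan_id'):.0%}")
```

Expressing each dimension as a ratio between 0 and 1 makes it possible to track the values over time and to define thresholds per field.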

2.3.2.2 Example: Friday Afternoon Measurement

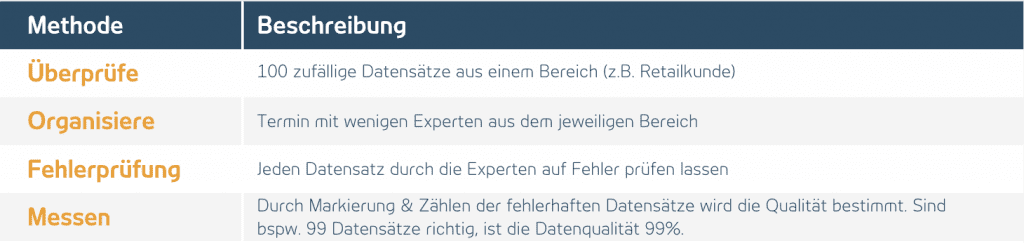

A quick and straightforward method for measuring data quality is provided by Thomas C. Redman’s “Friday Afternoon Measurement”[1]. It is broken down into four steps, which are shown in the accompanying figure.
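
The counting step at the heart of this method can be sketched in a few lines of Python. The sketch assumes the commonly described variant of the method, in which reviewers mark obvious errors in a sample of recent records (typically around 100) and the score is the share of records without any marked error. The record structure and all names below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewedRecord:
    """One sampled record plus the fields reviewers marked as obviously wrong."""
    record_id: str
    flagged_fields: set[str] = field(default_factory=set)


def dq_score(sample: list[ReviewedRecord]) -> float:
    """Percentage of sampled records with no flagged field at all."""
    if not sample:
        raise ValueError("sample must not be empty")
    error_free = sum(1 for rec in sample if not rec.flagged_fields)
    return 100.0 * error_free / len(sample)


# Hypothetical review result: 4 sampled records, 1 of them with an error.
sample = [
    ReviewedRecord("R1"),
    ReviewedRecord("R2", {"customer_name"}),
    ReviewedRecord("R3"),
    ReviewedRecord("R4"),
]
print(f"DQ score: {dq_score(sample):.0f}%")
```

Keeping the flagged field names, rather than only a yes/no flag, also shows which data elements cause most of the errors.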

2.3.2.3 Challenges

Data quality can thus be measured in many ways. However, some difficulties arise in the measurement:

- Ongoing process necessary: It is not enough to check all data once (which is already very time-consuming). Rather, data quality must be reviewed continuously.

- Data volume is constantly increasing: Due to new regulatory requirements (e.g. AnaCredit or FINREP reporting) and the possibilities of Big Data / Data Analytics, the amount of data to be checked is constantly increasing.

- Near-time reporting: The need for data that is as up-to-date as possible requires fast data processing. This leaves significantly less time for time-consuming data quality checks.

3. Possible project procedure for the creation of new data quality checks

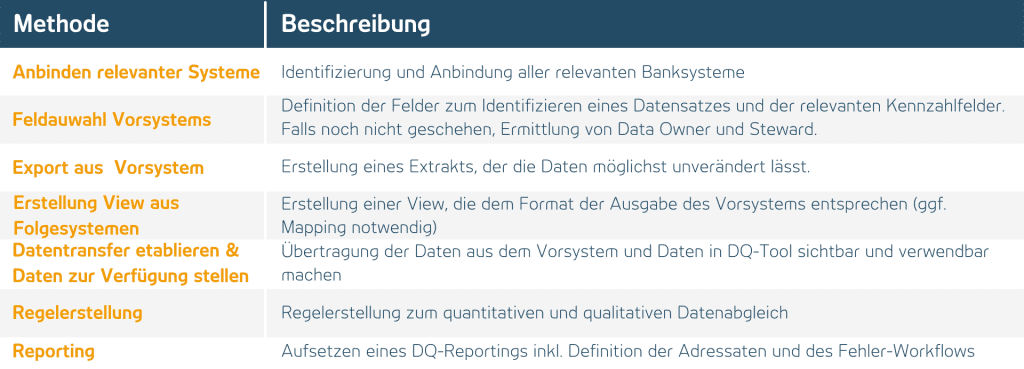

A few basic steps are necessary to create new DQ check rules. These are shown in the accompanying figure.

3.1 References

At ADWEKO we offer you experts with many years of experience and expertise in the implementation of DQ measures at banks.

3.1.1 Project example 1

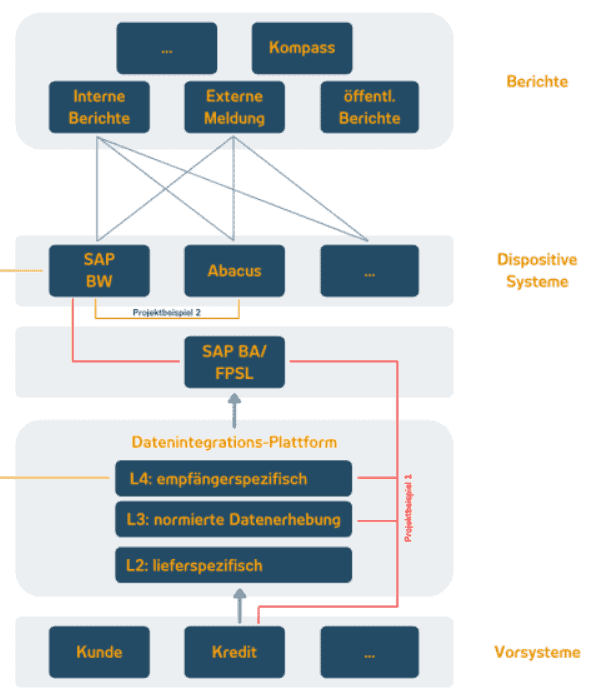

Implementation of automated data quality rules to check consistency and completeness across the retail loan supply chain.

As part of the implementation of a new retail loan system, existing loan portfolios from the previous loan process were migrated alongside new business. To measure and verify data quality, our ADWEKO consultants implemented automated system transition checks along the IFRS delivery route. The goal was to reconcile all relevant AFI metrics by amount across the delivery route (loan upstream system, data integration platform, SAP BA, SAP BW) using separate DQ rules. The AFI metrics denote the key figures, or facts, per single transaction. In the course of AFI processing, they are posted by amount in the sub-ledger for every business transaction in accordance with double-entry bookkeeping, and they are extracted to SAP BW at the end of the day.
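
A rule of this kind can be sketched as a stage-by-stage comparison of amount totals per metric. The following Python is a minimal illustration, not the implementation used in the project: the stage names follow the delivery route described above, while the metric names, amounts, and function name are hypothetical.

```python
from decimal import Decimal

# Delivery route from the text; the totals per stage and metric are made-up sample data.
ROUTE = ["loan_upstream", "integration_platform", "SAP_BA", "SAP_BW"]

totals: dict[str, dict[str, Decimal]] = {
    "loan_upstream": {"principal": Decimal("1500000.00"), "interest": Decimal("42000.50")},
    "integration_platform": {"principal": Decimal("1500000.00"), "interest": Decimal("42000.50")},
    "SAP_BA": {"principal": Decimal("1500000.00"), "interest": Decimal("41990.50")},
    "SAP_BW": {"principal": Decimal("1500000.00"), "interest": Decimal("41990.50")},
}


def reconcile_route(
    route: list[str], totals: dict[str, dict[str, Decimal]]
) -> list[tuple[str, str, str, Decimal]]:
    """Compare each stage with its successor; return (metric, source, target, difference)."""
    breaks = []
    zero = Decimal("0")
    for source, target in zip(route, route[1:]):
        # A metric missing on one side is treated as zero, so it shows up as a break.
        for metric in sorted(set(totals[source]) | set(totals[target])):
            diff = totals[target].get(metric, zero) - totals[source].get(metric, zero)
            if diff != 0:
                breaks.append((metric, source, target, diff))
    return breaks


for metric, source, target, diff in reconcile_route(ROUTE, totals):
    print(f"{metric}: {source} -> {target} differs by {diff}")
```

Comparing adjacent stages, rather than only the first and last system, localizes a difference to the hand-over where it first appears. `Decimal` is used instead of floating-point numbers so that amounts are compared exactly.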

3.1.2 Project example 2

Establishment of uniform Group-wide reconciliation and plausibility rules for defined key risk measures (KRMs).

The determination of the various KRMs, or even of different functional variants of a KRM, is based on a complex and heterogeneous IT system landscape. This can lead to discrepancies between systems that are technical rather than functional in origin, even if the functional definition is uniform. The aim of the DQ checks to be implemented was therefore to validate data from the areas of risk/financial controlling, accounting, and reporting by amount across the various IT systems. For this purpose, we implemented data quality rules for our customer in the form of automated end-to-end reconciliations to ensure the consistency of data between the analytical (dispositive) systems SAP BW and ABACUS.
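
An end-to-end reconciliation between two systems can be sketched as a keyed comparison with a tolerance. In the Python below, the two system names come from the text, but the KRM identifiers, values, tolerance, and function name are assumptions for illustration and do not reflect the rules implemented in the project.

```python
import math

# Hypothetical KRM values per system, keyed by (KRM, reporting unit).
bw = {("RWA", "unit_a"): 1250.0, ("RWA", "unit_b"): 830.0, ("EL", "unit_a"): 12.5}
abacus = {("RWA", "unit_a"): 1250.0, ("RWA", "unit_b"): 845.0, ("LCR", "unit_a"): 1.3}


def reconcile(left: dict, right: dict, rel_tol: float = 1e-6) -> dict[str, list]:
    """Classify keys as missing on one side or mismatching beyond the tolerance."""
    result: dict[str, list] = {"missing_right": [], "missing_left": [], "mismatch": []}
    for key in sorted(set(left) | set(right)):
        if key not in right:
            result["missing_right"].append(key)
        elif key not in left:
            result["missing_left"].append(key)
        elif not math.isclose(left[key], right[key], rel_tol=rel_tol):
            result["mismatch"].append(key)
    return result


report = reconcile(bw, abacus)
for category, keys in report.items():
    print(category, keys)
```

Separating missing keys from value mismatches distinguishes completeness problems (a figure never arrived) from consistency problems (a figure arrived with a different amount).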

4. ADWEKO fields of action

We support you in setting up and improving automated data quality rules across the bank-wide IT supply chain as well as in selecting and implementing DQ and MDM tools.

To ensure that the tool conforms to the bank-wide architecture and infrastructure, technical criteria are primarily relevant to the BI tool suite selection process. In addition to these technical criteria, however, business requirements and functional criteria (e.g., reporting options, standard and ad hoc reporting, user experience, drill functions, or authorization structure) as well as non-functional criteria (such as training requirements, vendor support, release cycles, and costs) must also be included. Modern data management systems offer numerous options for implementing comprehensive data quality management.

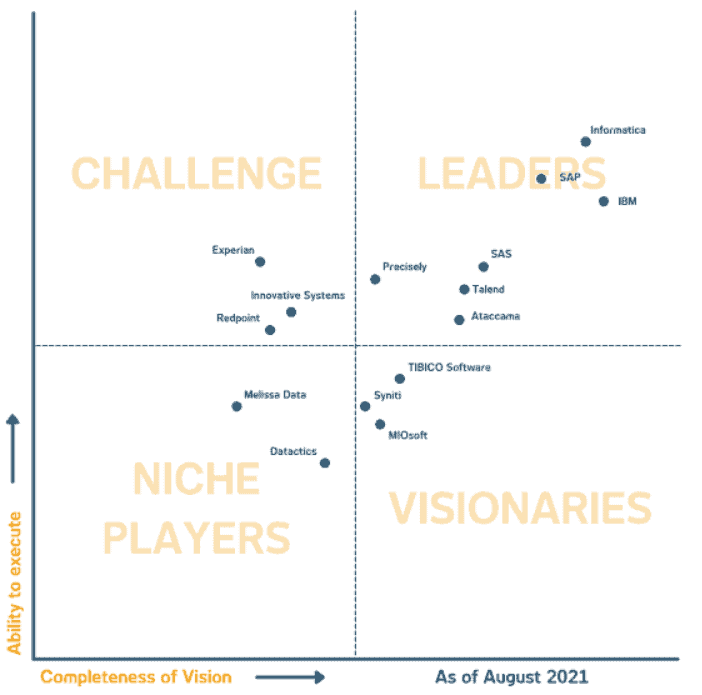

As of September 2021, the Gartner Magic Quadrant shows Informatica, SAP and IBM as leading tool vendors. However, many other providers are on the market and can be selected depending on the use case.

Conclusion

Data is a key asset of any financial institution. The amount of data to be processed is constantly increasing. As a result, the relevance of uniform data and data quality management is increasing. This is also reflected, for example, in the supervisory requirements in the BCBS 239 framework, which calls for precisely this uniform approach for all risk types. Such data quality management can only be ensured efficiently by means of appropriate IT support. Many banks’ current data quality processes focus on incident-based data quality audits. However, to benefit meaningfully from data quality measurement, the current process and system infrastructure should be fully validated and enhanced through data quality testing. In this context, ADWEKO develops individual solutions together with customers using state-of-the-art BI tools.

Tobias Scholz

Managing Consultant at ADWEKO
